Product Forums

This is a dedicated space for users of HP Z products to seek assistance, share insights, and collaborate with fellow members.

54 Topics

dacExplorer

asked in Workstations

ProUserOneExplorer

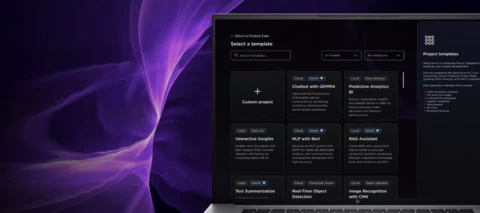

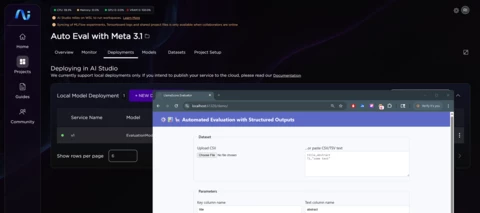

posted in HP AI Studio

miracFenceHP Z Data Science Ambassador

published in HP AI Studio

jrgosalvezCommunity Manager

published in HP AI Studio

demo_masterHP Employee

posted in HP AI Studio

jrgosalvezCommunity Manager

published in HP AI Studio

A

Anonymous

published in HP AI Studio

miracFenceHP Z Data Science Ambassador

posted in HP Z Boost

A

Anonymous

published in HP AI Studio

matt.trainorCommunity Manager

published in HP AI Studio

mikoaroExplorer

asked in HP AI Studio

mikoaroExplorer

asked in HP AI Studio

eimispachecoContributor

asked in HP AI Studio

A

Anonymous

published in HP AI Studio

A

Anonymous

published in Workstations

A

Anonymous

published in Workstations

demo_masterHP Employee

posted in HP AI Studio

David332Explorer

asked in Workstations

demo_masterHP Employee

posted in HP AI Studio

RickJHP Employee

published in HP AI Studio

RickJHP Employee

published in HP AI Studio
