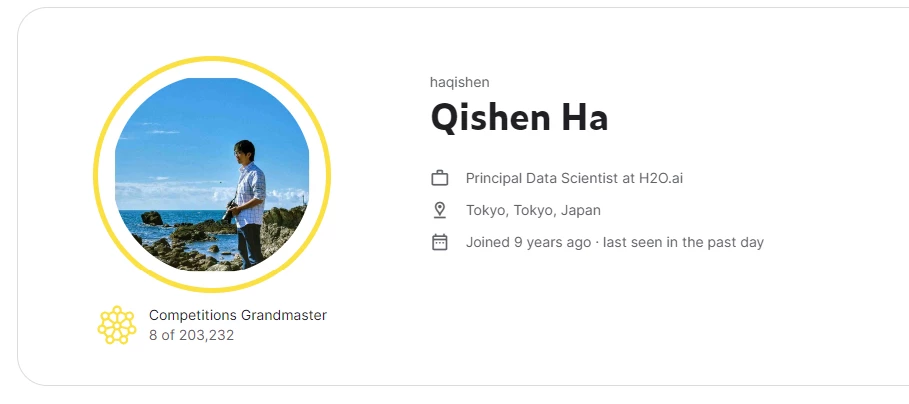

HP Data Science Ambassadors are Kaggle Grandmasters and AI Creator innovators who constantly experiment and share their findings.

Qishen Ha, a Kaggle Competitions Grandmaster, shared his thoughts on the evolution of Kaggle computer vision competitions, reflecting on lessons learned and best practices.

Reflecting on the Evolution of Computer Vision Competitions on Kaggle: A Personal Perspective
======================================================================

Hello Kaggle Community,

Having participated earnestly in Kaggle competitions for about five years now, I felt compelled to share some thoughts, particularly on the changes I've observed in Kaggle's computer vision contests over the years. I'm jotting these down as they come to me, so please bear with me if they seem a bit scattered.

Over the past decade, computer vision technology has developed rapidly, thanks in large part to advances in hardware, and Kaggle's computer vision competitions have flourished and evolved along with it. In just ten years, we've gone from simple image classification challenges to competitions involving image segmentation, object detection, video processing, multimodal tasks, visual reinforcement learning, image generation, and more. The way people compete has also changed significantly: from an initial focus on trying out the latest papers and extracting key information from the data, to a more holistic approach that includes manually adding new labels to datasets, seeking out additional external datasets, and mining hidden information about the test data through submissions.

First, consider the types of competitions. It's easy to notice that not all computer vision tasks are suitable for competitions. Image classification and object detection, for example, are well suited: the models and metrics are relatively stable, and CV score, public LB, and private LB are highly correlated, so efforts to improve the CV score will likely pay off with a decent final result (TensorFlow Great Barrier Reef, Landmark Recognition 2020). Image generation, on the other hand, is generally unsuitable for competitions, because assessing image quality is inherently subjective, which leaves participants puzzled about how to optimize their models (Generative Dog Images).

Next, let's talk about how participation methods have changed. Early competitors focused more on new techniques. Whenever a new model appeared on arXiv, they would excitedly try it out, and this often paid off (Bengali.AI Handwritten Grapheme Classification, Jigsaw Unintended Bias in Toxicity Classification, APTOS 2019 Blindness Detection). At that time, the ability to quickly replicate papers had a major influence on final results. Today, however, competitors rarely improve their results by trying the latest papers. I believe this is due to several factors:

- As deep learning continues to boom, the number of papers published each year has skyrocketed, and their average quality has gradually declined; some papers even report fabricated results that cannot be replicated. As a result, competitors now spend much more time trying new papers, with highly unpredictable returns.

- The broad direction of deep learning has solidified. In the early years, almost every year saw a new model dominate the ImageNet competition with results far better than the previous year's. Now, designing new models that improve performance has become extremely difficult, so recent papers have moved into more niche areas, and the likelihood of any given paper being useful in a competition is relatively low.

- In research, newer techniques tend to require more computational power, while the computing resources allowed in Kaggle competitions have not increased significantly in recent years. So even when a new method would be effective in a competition, its compute requirements can make it impractical to use.

Since we can no longer rely on new papers to improve competition results, people have turned to another major direction: data. Some may recall that in the early days, Kaggle was quite strict about the use of external data, requiring open licenses, disclosure in the forums, and so on, to keep competitions as fair as possible with respect to data. Lately, those requirements seem to have relaxed. Over the past two years, I've seen many gold medal solutions mention that the team added new labels to the training set themselves or introduced external datasets without sharing them in the forums, with no consequences. As a result, more and more competitions have turned into data battles. Often, though, participants have no real choice, as in medical imaging competitions: medical images are costly to obtain, so the amount of data provided by the organizers is usually not enough to train a solid model.

Moreover, with the recent explosion of Large Language Models (LLMs), much of the community's attention has been diverted. It is plain to see that computer vision has entered a phase of incremental progress, and I expect it will become increasingly difficult to find eye-catching solutions in Kaggle's computer vision competitions in the future.

I look forward to hearing your thoughts on these observations. Have you noticed similar trends, or do you have a different perspective on the future of computer vision competitions on Kaggle?

Best regards,

Qishen

H2O Principal Data Scientist,

Z by HP Global Data Science Ambassador.