Hello everyone! Remember the announcement I posted about the project we did for HP in Switzerland (above)? I am finally ready to share the results of our experiments.

Late last year we received a special request from HP: explore the NPU capabilities of their newest machines, the ZBook Power (Intel Core Ultra 9, RTX 3000 Ada, 64 GB) and the ZBook Firefly (Intel Core Ultra 7, RTX A500, 32 GB).

As you know, HP is a global leader in computing and innovation, developing cutting-edge technology for professionals and businesses. They recently introduced a new generation of mobile workstations with NPUs. NPUs are specialized processors designed for AI and machine learning tasks. They are well suited to natural language processing (NLP) and computer vision applications, taking load off the CPU and GPU while running AI inference efficiently. Despite this potential, many customers and partners struggle to see the benefits of using AI-powered devices or of running AI workloads locally instead of in the cloud.

To address this, a team of our students developed an application that runs local AI models on three different tasks (chatbot, image classification, and object detection) to evaluate NPU performance on AI-driven workloads and compare it with CPUs and GPUs.

The project measures utilization, offloading, elapsed time, latency, and throughput across the different processing units (CPU, GPU, and NPU). To make full use of a local AI workstation, the goals were to run the whole solution locally, leverage state-of-the-art AI tools, and build a dashboard to monitor system performance. As part of this effort, the team implemented AI tasks in two areas: natural language processing, with an AI chatbot, and computer vision, with image classification and object detection.

Chatbot

The chatbot is designed specifically for HP users. It combines a large language model (LLM) with retrieval-augmented generation (RAG) to answer questions about the workstations, their specs, and recommendations, retrieving information from preloaded HP PDF documents. Unlike cloud-based AI assistants, it runs entirely locally, so user data never leaves the device.

The chatbot follows a self-RAG design: before generating an answer, it evaluates whether the retrieved source material is relevant to the user’s question. This helps ensure the answer is grounded in accurate, relevant information that matches the question.
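The relevance check can be sketched as retrieve, grade, then generate. This is a minimal sketch, not the team's code: the word-overlap score is a hypothetical stand-in for the embedding search and LLM-based relevance grader a real self-RAG pipeline would use, and `Chunk`, `MIN_RELEVANCE`, `build_prompt`, and the sample passages are illustrative names and made-up text.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical threshold: chunks scoring below it are treated as irrelevant.
MIN_RELEVANCE = 0.3

@dataclass
class Chunk:
    source: str  # e.g. the PDF file the passage was extracted from
    text: str

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def relevance(question: str, chunk: Chunk) -> float:
    """Fraction of question words found in the chunk (stand-in for an LLM grader)."""
    q = tokenize(question)
    return len(q & tokenize(chunk.text)) / len(q) if q else 0.0

def retrieve_and_grade(question: str, chunks: list[Chunk], k: int = 3) -> list[Chunk]:
    """Retrieve the top-k chunks, then keep only those graded as relevant."""
    ranked = sorted(chunks, key=lambda c: relevance(question, c), reverse=True)[:k]
    return [c for c in ranked if relevance(question, c) >= MIN_RELEVANCE]

def build_prompt(question: str, chunks: list[Chunk]) -> str | None:
    """Build an LLM prompt from relevant chunks, or return None if none qualify."""
    relevant = retrieve_and_grade(question, chunks)
    if not relevant:
        return None  # nothing relevant: the chatbot should say it does not know
    context = "\n\n".join(f"[{c.source}] {c.text}" for c in relevant)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Made-up passages standing in for text extracted from HP PDFs.
docs = [
    Chunk("power.pdf", "The ZBook Power ships with 64 GB of memory."),
    Chunk("warranty.pdf", "Warranty and service options."),
    Chunk("firefly.pdf", "The ZBook Firefly is lightweight."),
]
print(build_prompt("How much memory does the ZBook Power have?", docs))
```

If no passage passes the grade, `build_prompt` returns `None`; that is the point where a self-RAG-style chatbot would decline to answer instead of improvising from unrelated context.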

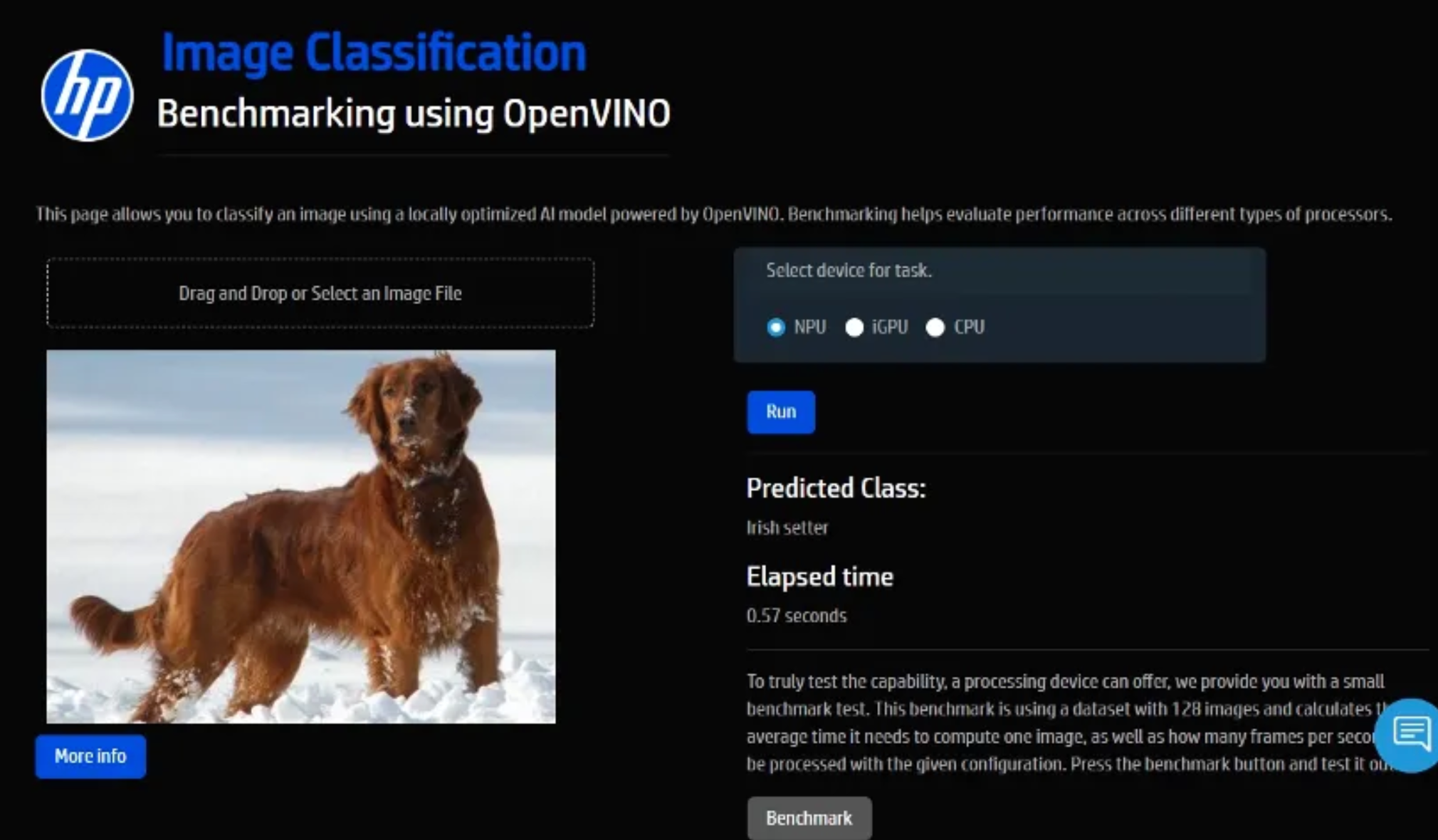

Image classification

Image classification allows a computer to recognize what an image shows and assign it to a category. For instance, if you upload a picture of a dog, the model predicts its breed.
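Under the hood, a classifier outputs one raw score (logit) per class, and the prediction is the class with the highest probability after a softmax. A minimal plain-Python sketch; the breed labels and logit values are made up for illustration and do not come from the team's model.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(labels: list[str], logits: list[float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda p: p[1], reverse=True)
    return ranked[:k]

labels = ["beagle", "golden retriever", "husky", "poodle"]  # hypothetical classes
logits = [1.2, 3.5, 0.3, -0.8]  # hypothetical model output for one image
for label, p in top_k(labels, logits):
    print(f"{label}: {p:.1%}")
```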

Beyond basic image recognition, image classification has many practical applications across industries, such as AI-powered quality control in manufacturing, secure on-device document processing for ID verification in finance, enhanced document scanning and automation in smart offices, and X-ray or MRI analysis in medical imaging without relying on the cloud. The key metrics we look at here are:

- Elapsed time: Total time from start to result.

- Latency: Time per inference, i.e., one prediction by the model (lower is better).

- Throughput: Number of inferences per second (higher is better).
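The three metrics can be derived from per-inference timings. A minimal sketch, assuming images are processed one at a time (batch size 1) and each inference is timed individually; `BenchmarkResult`, `summarize`, and the sample timings are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class BenchmarkResult:
    elapsed_s: float       # total wall-clock time from start to result
    latency_ms: float      # average time per inference
    throughput_ips: float  # inferences per second

def summarize(latencies_s: list[float], elapsed_s: float) -> BenchmarkResult:
    """Compute elapsed time, average latency, and throughput.

    `latencies_s` holds one duration (seconds) per inference; `elapsed_s`
    is the total run time, which may include pre/post-processing overhead.
    """
    return BenchmarkResult(
        elapsed_s=elapsed_s,
        latency_ms=mean(latencies_s) * 1000,
        throughput_ips=len(latencies_s) / elapsed_s,
    )

# Hypothetical run: 4 inferences of 20 ms each, 0.1 s total including overhead.
print(summarize([0.02, 0.02, 0.02, 0.02], elapsed_s=0.1))
```

Because throughput is computed from the total elapsed time, it includes overhead: the sample gives 40 inferences per second rather than the 50 per second that a 20 ms latency alone would suggest.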

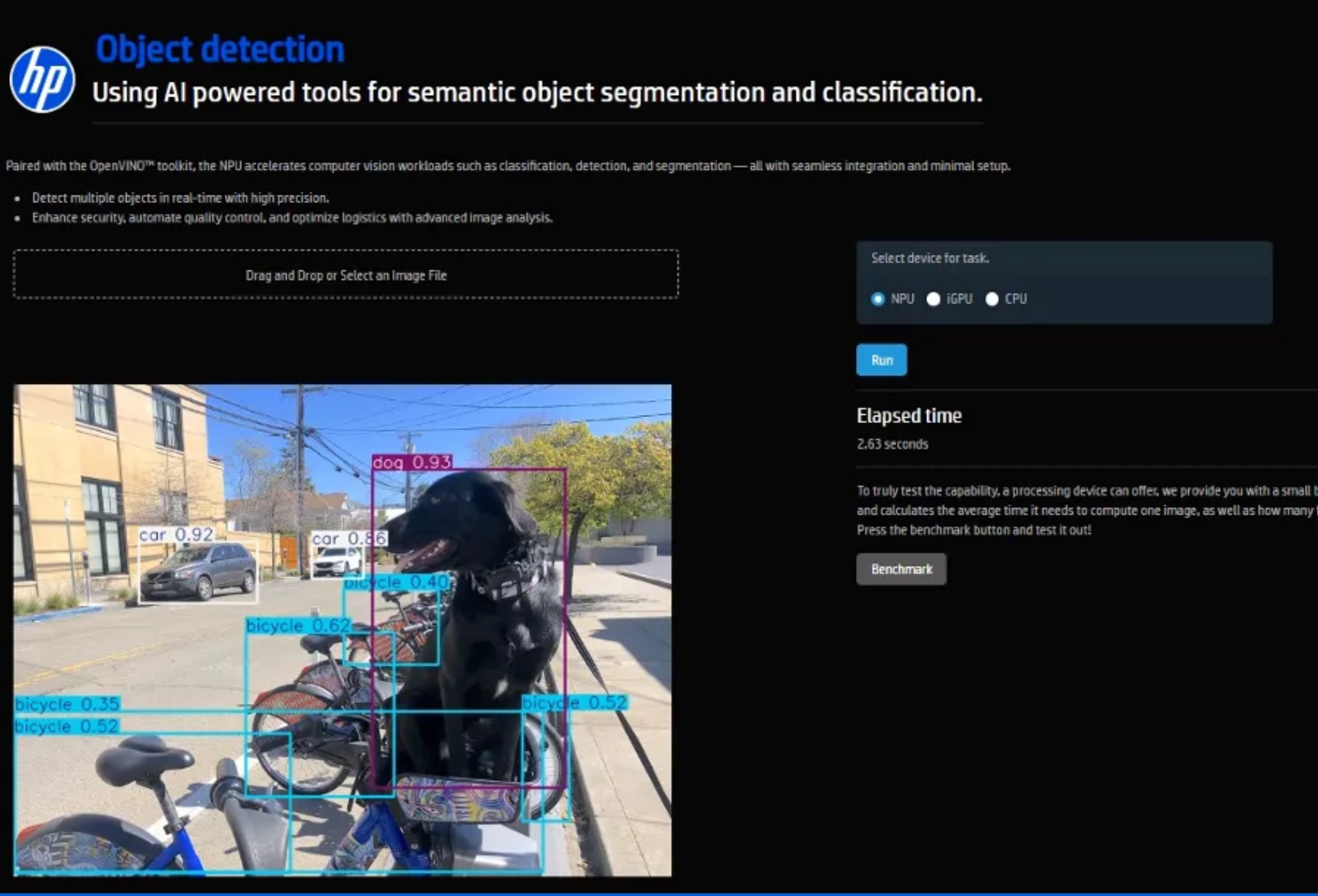

Object detection

Object detection goes beyond classification: it identifies and locates multiple objects within an image, typically marking each one with a labeled bounding box. It is a key technology in computer vision applications such as self-driving cars and security surveillance.
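Because a detector usually proposes several overlapping boxes for the same object, detection pipelines commonly finish with non-maximum suppression (NMS) based on intersection over union (IoU). The source does not say which detector or post-processing the team used; this is a generic sketch with hypothetical boxes in (x1, y1, x2, y2) pixel format and an assumed IoU threshold of 0.5.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: list[Box], scores: list[float], iou_threshold: float = 0.5) -> list[int]:
    """Return indices of boxes kept by greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

# Two near-duplicate detections of one object, plus a separate object.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.9, 0.75, 0.8]
print(nms(boxes, scores))  # [0, 2]
```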

Performance

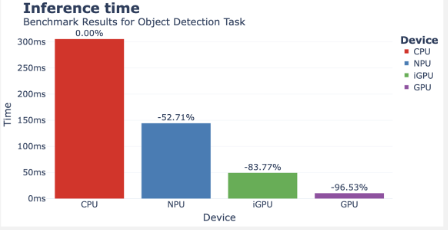

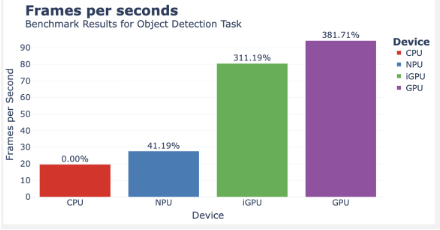

To evaluate performance, the app runs a benchmark on 128 images, measuring the average processing time per image and the number of frames per second (FPS); a higher FPS means smoother real-time detection.
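A benchmark like this can be sketched with `time.perf_counter`. This is a minimal sketch, assuming a hypothetical `infer` callable that wraps one model run on one image; the untimed warm-up runs are also an assumption (first runs often include one-time costs such as model compilation), not something the source describes.

```python
import time
from collections.abc import Callable, Sequence

def run_benchmark(infer: Callable[[object], object],
                  images: Sequence[object],
                  warmup: int = 5) -> tuple[float, float]:
    """Return (average ms per image, FPS) over all images."""
    if not images:
        raise ValueError("need at least one image")
    # Warm-up runs are excluded from the measurement.
    for img in images[:warmup]:
        infer(img)
    start = time.perf_counter()
    for img in images:
        infer(img)
    elapsed = time.perf_counter() - start
    avg_ms = elapsed / len(images) * 1000
    fps = len(images) / elapsed
    return avg_ms, fps

if __name__ == "__main__":
    # Hypothetical stand-in for a real detector: sleeps about 2 ms per "image".
    fake_images = list(range(128))
    avg_ms, fps = run_benchmark(lambda img: time.sleep(0.002), fake_images)
    print(f"{avg_ms:.2f} ms/image, {fps:.1f} FPS")
```

With one image at a time, the two numbers are reciprocals (FPS = 1000 / average ms), so they describe the same measurement from two angles.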

CPU (Central Processing Unit): The CPU is a general-purpose processor designed to handle a wide variety of tasks. It offers versatility and precision, but it is typically slower at the large-scale parallel operations common in AI workloads, such as the matrix multiplications inside neural networks.

GPU (Graphics Processing Unit): GPUs are optimized for parallel processing, which makes them well suited to workloads that can be split into many smaller, simultaneous computations. This architecture is especially effective for training AI models. However, GPUs are physically larger and consume significantly more power than NPUs.

NPU (Neural Processing Unit): NPUs are specialized processors built specifically for AI-related tasks. They strike a balance between the flexibility of CPUs and the parallel processing power of GPUs while maintaining lower energy consumption. NPUs are designed to efficiently offload AI workloads from the CPU and GPU, keeping the system responsive even under complex workloads or when multitasking. This makes them a practical choice for everyday users who need AI capabilities without the high power demands of a GPU.
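Because not every machine exposes an NPU, an application that targets one typically needs a fallback order. A minimal sketch, assuming device names in the style of Intel's OpenVINO runtime ("NPU", "GPU", "CPU", or indexed names like "GPU.0"), where the list of available devices can be read from `Core().available_devices`; the source does not name the inference framework, and `pick_device` and its default preference order are hypothetical.

```python
# Hypothetical helper: choose an inference device with a fallback order.
# OpenVINO-style device names are an assumption, not the project's confirmed setup.

def pick_device(available: list[str],
                preference: tuple[str, ...] = ("NPU", "GPU", "CPU")) -> str:
    """Return the first available device matching the preference order."""
    for wanted in preference:
        for name in available:
            # Strip an index suffix such as ".0" before comparing device types.
            if name.split(".")[0].upper() == wanted:
                return name
    raise RuntimeError(f"none of {preference} found in {available}")

# Example: an NPU-equipped laptop with one discrete GPU.
print(pick_device(["CPU", "GPU.0", "NPU"]))  # NPU
```

The preference order encodes the trade-off described above: the NPU first for efficient, low-power inference, while a throughput-bound job such as large-batch processing might reasonably pass `("GPU", "NPU", "CPU")` instead.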

This project highlights the potential of Intel-powered HP AI PCs to run demanding AI tasks locally, demonstrating the growing viability of edge computing. By integrating a local LLM chatbot with RAG, image classification, object detection, and real-time resource monitoring, the app showcases how CPUs, GPUs, and NPUs perform across various AI workloads.

NPUs, in particular, offer a compelling combination of efficiency, speed, and responsiveness, making advanced AI applications accessible even on lightweight mobile workstations. For professionals seeking performance without compromising on power consumption or data privacy, NPU-enabled devices represent a future-ready upgrade.

Challenges remain, such as the need for broader software support and better developer tooling, but continued investment and real-world benchmarking should drive adoption. As this project demonstrates, the shift toward AI-powered workflows is well underway, and NPUs are poised to play a key role.

Watch the app in action in this YouTube video: "Are NPU the future?" (the demo starts at 8:45).

. . .

Conclusion

Our findings suggest that, for AI workloads, CPUs are best at general-purpose computations and sequential logic, while GPUs, with their parallel processing capabilities, excel at training large, complex AI models and at large-scale inference. NPUs are specialized for highly efficient, low-power AI inference on edge devices, making them ideal for real-time applications where data privacy and minimal latency are critical, for example local software such as the new AI-powered tools in Microsoft Paint. Ultimately, these units complement each other in a heterogeneous computing environment, optimizing performance and power consumption across the entire AI pipeline, from training to deployment.

If you’ve ever tried to program an NPU, you know that this specialization comes with its own set of challenges, from navigating vendor-specific toolchains to optimizing models for these highly efficient architectures. I’d love to hear about your experiences and the hurdles you encountered. Feel free to share!

Stay tuned for more insights into the exciting world of AI!

This demo was made by our graduates Jan Roesnick, Kristina Liang, Marisa Davis, and Natalia Neamtu, with support from Sebastian Gottschalk and Stefanie Wedel. Feel free to contact us if you have questions about the project details or would like to request the code.

*As a Z by HP Global Data Science Ambassador, I have been provided with HP products to facilitate our innovative work.