Grok-1.5 and Grok-1.5 Vision (Grok-1.5v) are LLMs from xAI, Elon Musk’s AI company. In less than a year, the Grok team has developed, demonstrated, and released strong open-source LLMs that aim to avoid censorship. On the strength of that speed and performance, the team has secured a significant Series B funding round.

Grok-1.5 (announced March 28, 2024) uses multi-query attention and supports long contexts. It is not yet available outside of early testers. Grok-1 (released March 17, 2024), however, is open source and, like Llama, available for commercial use. It is a large model, though: a 314B-parameter, 8-bit quantized pre-trained model, trained from scratch on X data, that can augment responses with X data. Grok-1 performs well on logic tasks, where other models can struggle.

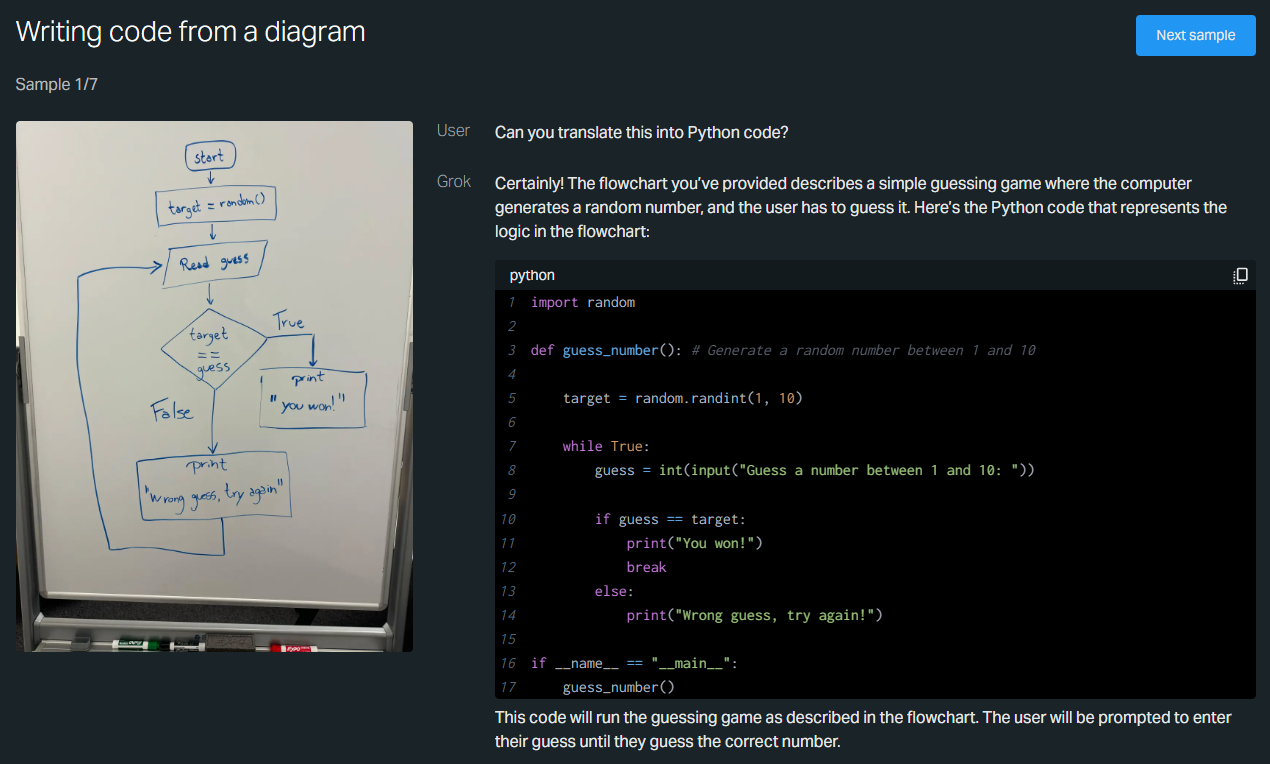

Grok-1.5v (announced April 12, 2024) is a multimodal model that understands images, so it can reason over visual information and interpret actions. In zero-shot comparisons without chain-of-thought prompting, Grok-1.5v performed well against other pre-trained models.

Because the pre-trained models are computationally expensive, AI creators are itching to use quantized versions. While they experiment with what’s available, many are resorting to private clouds or limiting their cloud compute usage to keep costs down.
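
To put the cost in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights of a 314B-parameter model need at different precisions. The helper `weight_memory_gb` is a hypothetical name for illustration, and the 16-bit and 4-bit rows are comparison points only, not formats the Grok team has released. The estimate covers weights alone and ignores activations, the attention cache, and framework overhead, so real requirements will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


GROK_1_PARAMS = 314e9  # parameter count quoted for Grok-1

# 8-bit matches the released checkpoint described above;
# 16-bit and 4-bit are illustrative assumptions for comparison.
for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(GROK_1_PARAMS, bits):.0f} GB")
```

Even at 8 bits the weights alone come to roughly 314 GB, which is more than a single commodity GPU can hold and helps explain the interest in more aggressive quantization.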

The image below, from the xAI Grok-1.5v website, shows how Grok-1.5v can convert images into code.