The Hidden Challenge of Connecting AI to Your Services

Picture this: You’ve built a fantastic API that powers your application. It’s well-documented, follows REST principles, and serves thousands of requests daily. Now, with AI agents becoming mainstream, you’re excited to make it available to LLMs through the Model Context Protocol (MCP). There are even tools that can auto-convert it into an MCP server. What could go wrong?

As it turns out, quite a lot.

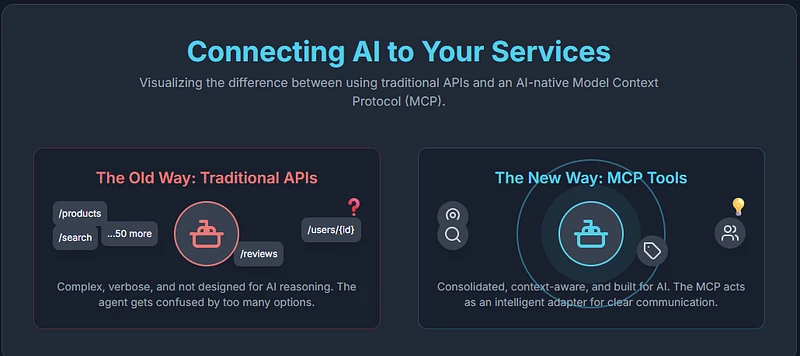

The rush to make existing APIs available to AI agents reveals a fundamental mismatch: APIs designed for traditional software weren’t built with AI’s unique constraints and capabilities in mind. Let’s explore why simply converting your API into an MCP tool might be setting your AI integration up for failure.

Understanding the Players: Agents, Tools, and MCP

Before diving into the problems, let’s establish our terminology. In the AI ecosystem:

- Agents are LLMs that can take actions beyond just generating text

- Tools are the functions these agents can call to interact with external systems

- MCP (Model Context Protocol) is the emerging standard that enables this interaction

Think of MCP as the universal adapter that lets AI agents plug into various services. It’s an elegant solution that promises to transform LLMs from passive text generators into active digital workers. The temptation is obvious: why not quickly expand what AI can do by converting every existing API into an MCP tool?

Problem #1: The Paradox of Too Many Tools

Here’s something counterintuitive: giving an AI agent more tools can actually make it less effective.

Traditional APIs embrace granularity. A typical SaaS product might expose 50–100 endpoints, each handling a specific task. This works beautifully for developers who know exactly which endpoint they need. But AI agents operate differently.

The Cognitive Load Problem

Every tool you give an agent consumes part of its “working memory” (the context window). The agent must:

- Remember what each tool does

- Decide which tool is appropriate for each task

- Keep track of tool descriptions and parameters

GitHub Copilot in VS Code, for instance, caps agent tools at 128, and even that is often too many. Models start making mistakes: calling the wrong tools or getting confused about their capabilities.
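To get a feel for that overhead, here is a rough sketch that estimates how much of the context window a set of tool definitions occupies. It assumes a crude heuristic of about four characters per token, which is not a real tokenizer. The endpoint_tool helper is hypothetical and mimics a mechanical one-tool-per-endpoint conversion. The dictionaries use the name, description, and inputSchema fields that MCP servers advertise for their tools.

```python
import json

# Assumption: roughly 4 characters per token. This is a crude rule of thumb;
# use your model's real tokenizer for actual numbers.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def tool_overhead(tools: list[dict]) -> int:
    """Estimate the context tokens consumed by a list of tool definitions.

    The full definition (name, description, parameter schema) is serialized,
    because the model has to read all of it before it can choose a tool.
    """
    return sum(estimate_tokens(json.dumps(tool)) for tool in tools)


def endpoint_tool(path: str) -> dict:
    """Hypothetical one-tool-per-endpoint conversion of a GET route."""
    name = "get_" + path.strip("/").replace("/", "_").replace("{", "").replace("}", "")
    return {
        "name": name,
        "description": f"Calls GET {path} and returns the raw JSON response.",
        "inputSchema": {
            "type": "object",
            "properties": {"id": {"type": "string"}, "query": {"type": "string"}},
        },
    }


paths = [
    "/products",
    "/products/{id}",
    "/products/search",
    "/products/categories",
    "/products/{id}/reviews",
    "/products/{id}/variants",
]
tools = [endpoint_tool(p) for p in paths]
print(f"{len(tools)} tools, ~{tool_overhead(tools)} tokens of definitions per request")
```

Every extra endpoint adds to this fixed cost, and the cost is paid whether or not the agent ever calls that tool.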

Real-World Example

Imagine converting a typical e-commerce API:

- GET /products

- GET /products/{id}

- GET /products/search

- GET /products/categories

- GET /products/{id}/reviews

- GET /products/{id}/variants

- …and 20 more endpoints

An AI agent now has to navigate this maze of options. A human developer would know to use /products/search for finding items, but the agent might waste time trying /products with filters, or worse, make multiple calls trying to piece together information that a single, well-designed tool could provide.

Problem #2: The Context Window Crisis

If tool proliferation is death by a thousand cuts, data formatting is the guillotine.

The JSON Tax

APIs love JSON. It’s readable, universal, and self-describing. But for AI agents, JSON is incredibly wasteful. Consider this typical API response:

```jsonc
{
  "products": [
    {
      "product_id": "12345",
      "product_name": "Wireless Headphones",
      "product_description": "High-quality wireless headphones with...",
      "product_price": 99.99,
      "product_category": "Electronics",
      "product_stock": 150,
      "product_rating": 4.5
    },
    // ... 99 more products
  ]
}
```

Every field name is repeated for every record. In a response with 100 products, each carrying seven prefixed keys, you’re sending “product_” 700 times! This verbosity can consume thousands of tokens, the currency of AI context windows.
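The 700 figure is simple arithmetic (7 prefixed keys × 100 records), and it is easy to check. The snippet below builds 100 placeholder records shaped like the example above and counts the prefix in the serialized payload. The values are illustrative, not real catalog data. It also measures how many characters go to key names alone, using character counts as a rough stand-in for tokens.

```python
import json


def make_records(n: int) -> list[dict]:
    """Placeholder records shaped like the example response (illustrative values)."""
    return [
        {
            "product_id": str(12345 + i),
            "product_name": "Wireless Headphones",
            "product_description": "High-quality wireless headphones",
            "product_price": 99.99,
            "product_category": "Electronics",
            "product_stock": 150,
            "product_rating": 4.5,
        }
        for i in range(n)
    ]


records = make_records(100)
payload = json.dumps({"products": records})

# Only the keys contain the prefix, so this counts 7 keys x 100 records.
prefix_count = payload.count('"product_')

# Characters spent on quoted key names alone, across all records.
key_chars = sum(len(json.dumps(key)) for record in records for key in record)

print(prefix_count)  # 700
print(f"{key_chars} of {len(payload)} characters are key names")
```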

The Alternative: Agent-Optimized Formats

Compare that to a CSV representation:

```csv
id,name,description,price,category,stock,rating
12345,Wireless Headphones,High-quality wireless...,99.99,Electronics,150,4.5
...
```

This format can use 50% fewer tokens while conveying the same information. For agents working within tight context limits, this efficiency is crucial.
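Converting a JSON array of flat records into CSV needs only the standard library. The function below is a sketch that assumes every record shares the same keys. Its strip_prefix parameter is an illustrative option for dropping a redundant prefix like “product_” from the header. Comparing character lengths gives a rough proxy for the token savings, though actual numbers depend on the model's tokenizer.

```python
import csv
import io
import json


def records_to_csv(records: list[dict], strip_prefix: str = "") -> str:
    """Serialize flat records as CSV so each field name appears only once.

    Assumes all records share the keys of the first one. csv.writer quotes
    values containing commas or quotes, so free-text descriptions stay intact.
    """
    if not records:
        return ""
    fields = list(records[0].keys())
    header = [
        f[len(strip_prefix):] if strip_prefix and f.startswith(strip_prefix) else f
        for f in fields
    ]
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    for record in records:
        writer.writerow([record[f] for f in fields])
    return buf.getvalue()


sample = [{"product_id": "12345", "product_name": "Wireless Headphones", "product_price": 99.99}]
print(records_to_csv(sample, strip_prefix="product_"))
# id,name,price
# 12345,Wireless Headphones,99.99

many = sample * 100
print(len(json.dumps(many)), "JSON chars vs", len(records_to_csv(many, "product_")), "CSV chars")
```

CSV works best for flat, uniform records. Nested objects would need flattening first, and in those cases a compact JSON projection may be the simpler choice.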

The Overflow Problem

Even worse, APIs often return far more data than needed. A single API call returning 100 records with 50 fields each can completely overwhelm an agent’s context window, pushing out important instructions and previous conversation history. It’s like trying to have a conversation while someone dumps an encyclopedia on your desk.
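One defensive measure is to cap how much a tool returns and tell the agent what was left out. This is a minimal sketch. It uses a character budget as a stand-in for a token budget, which is an assumption. The fit_to_budget helper is hypothetical and not part of any MCP SDK.

```python
import json


def fit_to_budget(records: list[dict], max_chars: int) -> dict:
    """Hypothetical guard: keep as many records as fit in max_chars of serialized
    JSON, in order, and report how many were dropped.

    max_chars stands in for a token budget (an assumption; swap in a real
    tokenizer if you have one).
    """
    kept: list[dict] = []
    used = 0
    for record in records:
        size = len(json.dumps(record))
        if used + size > max_chars:
            break
        kept.append(record)
        used += size
    omitted = len(records) - len(kept)
    result: dict = {"records": kept, "omitted": omitted}
    if omitted:
        # Tell the agent the response is partial and how to get the rest.
        result["note"] = (
            f"{omitted} more records not shown. Narrow the query or request the next page."
        )
    return result


rows = [{"id": i, "name": f"item {i}"} for i in range(100)]
result = fit_to_budget(rows, max_chars=500)
print(len(result["records"]), "kept;", result.get("note"))
```

Silent truncation can mislead an agent. Reporting the omitted count instead gives it what it needs to decide whether to refine the query.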

Problem #3: Agents Need Different Superpowers

The most fundamental issue isn’t technical — it’s conceptual. APIs are built for software; agents need tools built for reasoning.

What APIs Provide vs. What Agents Need

Traditional API Design:

- Returns raw data

- Focuses on completeness

- Assumes the consumer knows what to do next

- Optimizes for machine parsing

Agent-Optimized Tool Design:

- Returns contextualized information

- Includes summaries and recommendations

- Suggests logical next steps

- Optimizes for decision-making

A Practical Example

Consider a city information service. A traditional API might offer:

GET /cities?name=Seattle

Returns: full city data (population, coordinates, timezone, etc.)

An agent-optimized tool would provide:

search_city("Seattle")

Returns:

- A concise data table with key facts

- A natural-language summary of notable features

- Suggested follow-up actions ("Would you like details about Seattle's neighborhoods or climate?")

This layered approach plays to AI’s strengths: understanding context, following conversational flows, and making intelligent decisions about what information is most relevant.
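Here is one way such a layered response could be assembled. The sketch rests on several assumptions: the City record, the in-memory CITIES lookup, and the get_city_details tool named in the follow-ups are all hypothetical. The fields are also limited to timezone and approximate coordinates rather than a full city profile.

```python
from dataclasses import dataclass


@dataclass
class City:
    """Hypothetical city record; a real service would carry many more fields."""
    name: str
    country: str
    timezone: str
    latitude: float
    longitude: float


# Hypothetical stand-in for the backing data source.
CITIES = {
    "seattle": City("Seattle", "United States", "America/Los_Angeles", 47.6062, -122.3321),
}


def search_city(name: str) -> str:
    """Return a compact table, a one-line summary, and suggested next steps."""
    city = CITIES.get(name.strip().lower())
    if city is None:
        # Tell the agent what to try next instead of returning an empty payload.
        return f'No city named "{name}" found. Check the spelling or try a nearby larger city.'
    table = "\n".join([
        "| field | value |",
        "|---|---|",
        f"| country | {city.country} |",
        f"| timezone | {city.timezone} |",
        f"| coordinates | {city.latitude:.2f}, {city.longitude:.2f} |",
    ])
    summary = f"{city.name} is in {city.country} and uses the {city.timezone} time zone."
    # get_city_details is a hypothetical follow-up tool.
    next_steps = "\n".join(
        f'- get_city_details(name="{city.name}", topic="{topic}")'
        for topic in ("neighborhoods", "climate")
    )
    return "\n\n".join([
        f"## {city.name}",
        table,
        "Summary: " + summary,
        "Suggested next steps:\n" + next_steps,
    ])


print(search_city("Seattle"))
```

Phrasing the follow-ups as concrete tool calls rather than open questions makes it easy for the agent to act on them directly.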

The Path Forward: Designing for AI-First

So, should we abandon the idea of exposing APIs to AI agents? Not at all. But we need to be thoughtful about how we do it.

Principle 1: Consolidate and Compose

Instead of exposing every endpoint, create a smaller set of powerful, flexible tools. One “search_products” tool that can handle multiple query types is better than five specialized search endpoints.
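As a rough illustration, a single search_products tool can cover lookup by ID, keyword search, and category or price filtering through optional parameters. The in-memory CATALOG and its entries are placeholders standing in for calls to the existing endpoints. The parameter names are assumptions, not a prescribed interface.

```python
from typing import Optional

# Placeholder catalog standing in for the backend API (illustrative data).
CATALOG = [
    {"id": "12345", "name": "Wireless Headphones", "category": "Electronics", "price": 99.99},
    {"id": "12346", "name": "USB-C Cable", "category": "Electronics", "price": 9.99},
    {"id": "22001", "name": "Desk Lamp", "category": "Home", "price": 24.50},
]


def search_products(
    query: str = "",
    product_id: Optional[str] = None,
    category: Optional[str] = None,
    max_price: Optional[float] = None,
    limit: int = 10,
) -> list[dict]:
    """One tool in place of separate list, get-by-id, search and category endpoints.

    Every argument is optional (names are assumptions), so the agent picks one
    tool and only decides which filters to fill in.
    """
    if product_id is not None:
        # Exact lookup short-circuits the other filters.
        return [p for p in CATALOG if p["id"] == product_id]
    results = []
    for product in CATALOG:
        if query and query.lower() not in product["name"].lower():
            continue
        if category and product["category"].lower() != category.lower():
            continue
        if max_price is not None and product["price"] > max_price:
            continue
        results.append(product)
    return results[:limit]


print(search_products(category="electronics", max_price=50))
# [{'id': '12346', 'name': 'USB-C Cable', 'category': 'Electronics', 'price': 9.99}]
```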

Principle 2: Be Context-Aware

Design tools that:

- Allow field projection (return only what’s needed)

- Provide summarization options

- Default to efficient formats

- Include pagination that makes sense for AI consumption
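Field projection and pagination can live in the same list tool. In the sketch below, list_records and its fields, page_size, and cursor parameters are hypothetical. The tool projects each record down to the requested fields and pages through results with an opaque cursor. It also adds a one-line summary so the agent knows how much remains.

```python
import base64
import json
from typing import Optional


def encode_cursor(offset: int) -> str:
    """Opaque cursor: the agent passes it back verbatim instead of doing offset math."""
    return base64.urlsafe_b64encode(json.dumps({"offset": offset}).encode()).decode()


def decode_cursor(cursor: Optional[str]) -> int:
    if not cursor:
        return 0
    return json.loads(base64.urlsafe_b64decode(cursor.encode()))["offset"]


def list_records(
    records: list[dict],
    fields: Optional[list[str]] = None,
    page_size: int = 20,
    cursor: Optional[str] = None,
) -> dict:
    """Hypothetical list tool: one page of records, optionally projected to the requested fields."""
    start = decode_cursor(cursor)
    page = records[start:start + page_size]
    if fields:
        # Field projection: drop everything the agent did not ask for.
        page = [{f: r[f] for f in fields if f in r} for r in page]
    end = start + len(page)
    return {
        "items": page,
        # A short summary so the agent knows where it is without counting items.
        "summary": f"Showing {start + 1}-{end} of {len(records)}." if page else "No more items.",
        "next_cursor": encode_cursor(end) if end < len(records) else None,
    }


rows = [{"id": i, "name": f"item {i}", "blob": "x" * 200} for i in range(45)]
first = list_records(rows, fields=["id", "name"])
print(first["summary"])  # Showing 1-20 of 45.
second = list_records(rows, fields=["id", "name"], cursor=first["next_cursor"])
print(second["summary"])  # Showing 21-40 of 45.
```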

Principle 3: Guide the Journey

Tools should help agents navigate complex workflows. Include metadata about:

- Recommended next actions

- Relationships between tools

- Common usage patterns
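MCP tool definitions carry a name, a description, and an inputSchema. That makes the description text the model already reads one practical place for this guidance. In the sketch below, ToolSpec and its usage_pattern and typical_next fields are hypothetical metadata, not part of the MCP schema. The build_catalog function folds them into each description and rejects references to tools that do not exist.

```python
from dataclasses import dataclass, field


@dataclass
class ToolSpec:
    name: str
    summary: str
    input_schema: dict
    # Hypothetical guidance metadata, rendered into the description below.
    usage_pattern: str = ""
    typical_next: list[str] = field(default_factory=list)


def build_catalog(specs: list[ToolSpec]) -> list[dict]:
    """Turn specs into MCP-style tool dicts (name, description, inputSchema)."""
    known = {spec.name for spec in specs}
    catalog = []
    for spec in specs:
        missing = [t for t in spec.typical_next if t not in known]
        if missing:
            # A dangling pointer would send the agent looking for a tool it can't call.
            raise ValueError(f"{spec.name} refers to unknown tools: {missing}")
        parts = [spec.summary]
        if spec.usage_pattern:
            parts.append(f"Typical use: {spec.usage_pattern}")
        if spec.typical_next:
            parts.append("Often followed by: " + ", ".join(spec.typical_next) + ".")
        catalog.append({
            "name": spec.name,
            "description": " ".join(parts),
            "inputSchema": spec.input_schema,
        })
    return catalog


specs = [
    ToolSpec(
        name="search_products",
        summary="Find products by keyword, category, or price.",
        input_schema={"type": "object", "properties": {"query": {"type": "string"}}},
        usage_pattern="start here when the user describes what they want.",
        typical_next=["get_product_overview"],
    ),
    ToolSpec(
        name="get_product_overview",
        summary="Summarize one product: price, reviews, and stock.",
        input_schema={
            "type": "object",
            "properties": {"product_id": {"type": "string"}},
            "required": ["product_id"],
        },
    ),
]
print(build_catalog(specs)[0]["description"])
```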

Principle 4: Abstract Intelligently

Don’t just expose your database schema through an API wrapper. Think about what the agent is trying to accomplish and design tools that align with those goals.
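For example, instead of mirroring GET /products/{id}, /reviews, and /variants as three tools, a goal-aligned tool can answer “tell me about this product” in one call. In this sketch, the fetch callable is a hypothetical stand-in for whatever HTTP client talks to the existing API. The response field names (name, price, rating, label, stock) are also assumptions.

```python
from statistics import mean
from typing import Any, Callable

# Hypothetical dependency: fetch(path) returns the parsed JSON for a GET request.
Fetch = Callable[[str], Any]


def get_product_overview(product_id: str, fetch: Fetch) -> str:
    """Compose three endpoint calls into one short, decision-ready answer."""
    product = fetch(f"/products/{product_id}")
    reviews = fetch(f"/products/{product_id}/reviews")
    variants = fetch(f"/products/{product_id}/variants")

    lines = [f"{product['name']}: ${product['price']:.2f}"]
    if reviews:
        avg = mean(r["rating"] for r in reviews)
        lines.append(f"{len(reviews)} reviews, average rating {avg:.1f}/5")
    else:
        lines.append("No reviews yet")
    in_stock = [v["label"] for v in variants if v.get("stock", 0) > 0]
    lines.append("In stock: " + (", ".join(in_stock) if in_stock else "none"))
    return "\n".join(lines)


# Fake responses for illustration only.
FAKE_API = {
    "/products/12345": {"name": "Wireless Headphones", "price": 99.99},
    "/products/12345/reviews": [{"rating": 4}, {"rating": 5}],
    "/products/12345/variants": [{"label": "Black", "stock": 3}, {"label": "White", "stock": 0}],
}
print(get_product_overview("12345", FAKE_API.__getitem__))
# Wireless Headphones: $99.99
# 2 reviews, average rating 4.5/5
# In stock: Black
```

Three round trips still happen behind the scenes. The difference is that the agent makes one decision and reads three lines instead of three raw payloads.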

Looking Ahead: The Evolution of AI Integration

The current wave of “API-to-MCP” conversions is a natural growing pain. As the ecosystem matures, we’ll likely see:

- Specialized MCP design patterns emerging for different use cases

- Middleware layers that intelligently translate between APIs and agent needs

- Hybrid approaches where agents generate code for simple API calls but use MCP tools for complex operations

The key insight is this: AI agents aren’t just another API consumer. They’re a fundamentally different type of user with unique strengths and constraints. The sooner we embrace this difference, the sooner we can build tools that truly unleash their potential.

Conclusion: Build for the Future, Not the Past

The temptation to quickly convert existing APIs into MCP tools is understandable. It promises instant AI integration with minimal effort. But as we’ve seen, this approach often creates more problems than it solves.

Instead of asking “How can we make our API work with AI?”, we should ask “What would a tool designed specifically for AI agents look like?” The answer might require more upfront work, but the result will be AI integrations that actually deliver on their promise.

As you consider exposing your services to AI agents, remember: APIs were designed for a world of deterministic software making precise requests. AI agents live in a world of probabilistic reasoning and contextual understanding. They deserve tools built for their reality, not retrofitted from ours.

The future of software isn’t just about AI consuming our APIs — it’s about reimagining how intelligent systems interact with our services. Let’s build that future thoughtfully.