In September, NVIDIA published an article outlining the value of custom small language models (SLMs) for agentic AI running on-prem on machines such as HP Z workstations.

Key Takeaways:

1. Economics of Small Language Models (SLMs):

Small Language Models (under ~10B parameters) can replace generalist LLMs for most agentic tasks. They’re 10–30× cheaper to serve, require fewer GPUs, and can be fine-tuned in hours instead of weeks. This shift lowers operational costs, reduces latency, and improves sustainability—critical as AI agents scale across industries.
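As a rough illustration of the cost argument, the sketch below computes what a mixed deployment costs relative to serving every call with an LLM. The function name `blended_cost_ratio` and the 70% SLM share are hypothetical choices for this example; only the 10–30× range comes from the text above.

```python
def blended_cost_ratio(slm_share: float, slm_cost_factor: float) -> float:
    """Serving cost of a hybrid SLM/LLM setup relative to LLM-only serving (= 1.0).

    slm_share:       fraction of agent calls handled by an SLM, between 0 and 1
                     (an assumed, workload-specific number).
    slm_cost_factor: how many times cheaper an SLM call is than an LLM call
                     (the text above cites 10-30x).
    """
    if not 0.0 <= slm_share <= 1.0:
        raise ValueError("slm_share must be between 0 and 1")
    if slm_cost_factor <= 0:
        raise ValueError("slm_cost_factor must be positive")
    # SLM calls cost 1/slm_cost_factor each; remaining calls cost 1.0 each.
    return slm_share / slm_cost_factor + (1.0 - slm_share)


# Hypothetical workload: 70% of calls go to an SLM that is 20x cheaper.
ratio = blended_cost_ratio(slm_share=0.7, slm_cost_factor=20)
print(f"Relative cost vs. LLM-only: {ratio:.3f}")
```

Under these assumed numbers the hybrid setup costs roughly a third of the LLM-only baseline. The remaining cost is dominated by the calls that still go to the LLM, so the share of traffic an SLM can absorb matters more than the exact cost factor.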

2. Localization and Democratization:

SLMs enable local, domain-specific adaptation—they can be easily fine-tuned to meet regional compliance, cultural norms, or specialized workflows. This encourages on-prem and edge deployment, supporting sovereign data control and fostering diversity by allowing more organizations to build their own tailored agents.

3. On-Prem Model Creation and Management:

With frameworks like NVIDIA Dynamo and ChatRTX, real-time, offline inference becomes practical on consumer or enterprise GPUs. Organizations can train, deploy, and manage specialized SLMs locally, ensuring privacy and reducing reliance on centralized cloud APIs.

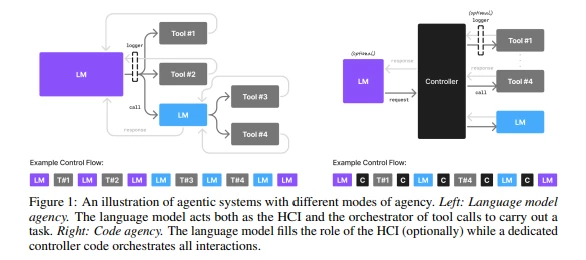

4. Strategic Model Design:

Agentic systems naturally decompose tasks. Using multiple specialized SLMs (“Lego-like” modular architecture) instead of one large monolith allows faster iteration, easier debugging, and better cost control—paving the way for hybrid systems where SLMs handle most tasks and LLMs are invoked only for complex reasoning.
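A minimal sketch of that hybrid pattern, assuming each task arrives with a known type label: specialized SLM handlers cover the task types they were tuned for, and anything else falls through to a general LLM. The `Router` class, the handler names, and the stub functions are all hypothetical; real handlers would call locally hosted models instead of returning tagged strings.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A handler takes the task payload and returns the model's response.
Handler = Callable[[str], str]


@dataclass
class Router:
    specialists: Dict[str, Handler]  # task type -> specialized SLM handler
    fallback: Handler                # general LLM for everything else

    def dispatch(self, task_type: str, payload: str) -> str:
        # Use the matching specialist if one exists, otherwise the LLM.
        handler = self.specialists.get(task_type, self.fallback)
        return handler(payload)


# Stub handlers standing in for real model calls (illustrative only).
def extract_slm(text: str) -> str:
    return f"[slm:extract] {text}"


def summarize_slm(text: str) -> str:
    return f"[slm:summarize] {text}"


def general_llm(text: str) -> str:
    return f"[llm:general] {text}"


router = Router(
    specialists={"extract": extract_slm, "summarize": summarize_slm},
    fallback=general_llm,
)

print(router.dispatch("extract", "invoice #123"))     # handled by an SLM
print(router.dispatch("plan", "multi-step request"))  # falls back to the LLM
```

Because each specialist sits behind the same function signature, one can be retrained or swapped without touching the others, which is where the faster iteration and easier debugging come from.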

5. Adoption Outlook:

SLM-first design offers clear economic, environmental, and operational advantages that are likely to reshape the agentic AI ecosystem within this decade. Cloud infrastructure is not required to personalize, host, or use SLM-based agentic AI.

📚 More at:

- Small Language Models are the Future of Agentic AI: https://arxiv.org/pdf/2506.02153
- NVIDIA Research Lab: research.nvidia.com/labs/lpr/slm-agents
- NVIDIA Dynamo Inference Framework: github.com/ai-dynamo/dynamo