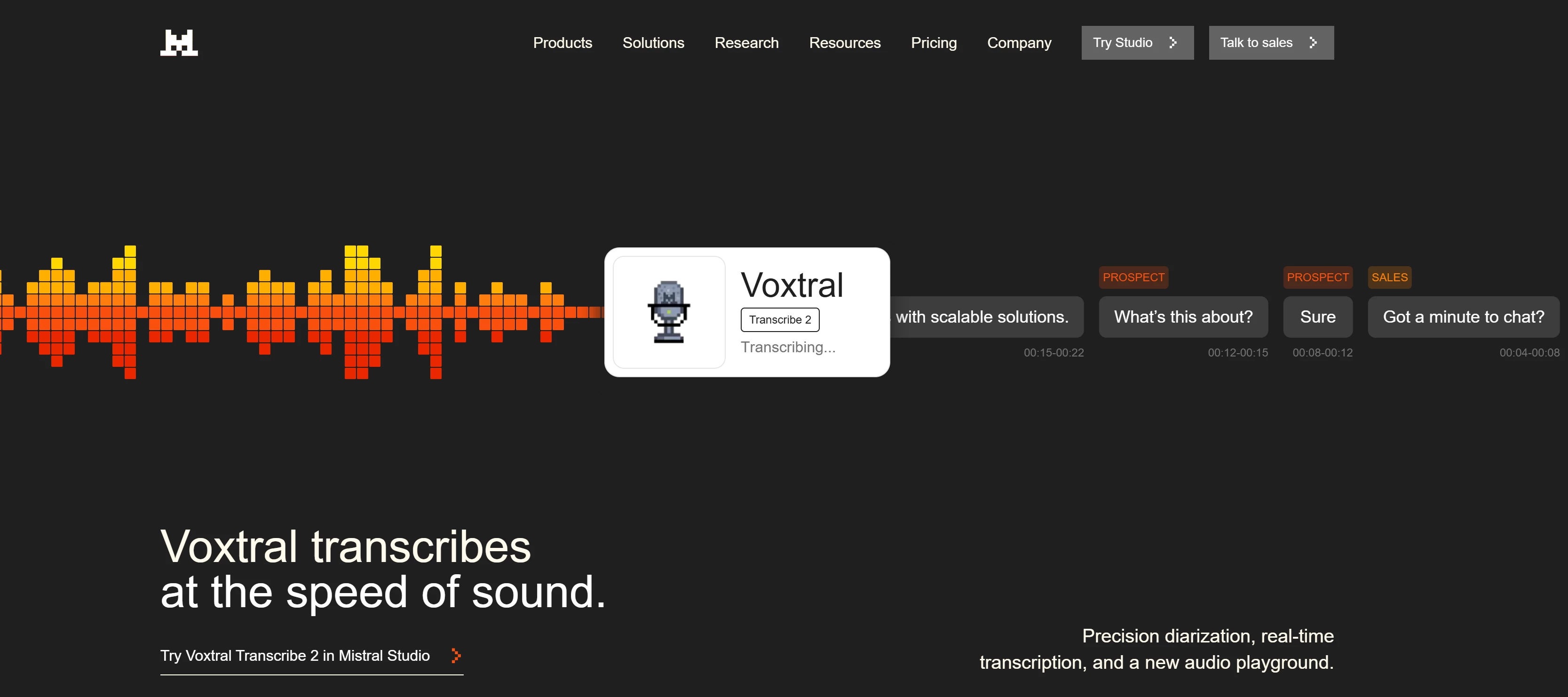

Mistral AI has unveiled Voxtral Mini Transcribe V2 and Voxtral Realtime, two speech‑to‑text models designed for near real‑time multilingual transcription and translation, with latency of around 200 ms and support for 13 languages.

At roughly 4 billion parameters, the models are compact enough to run locally on devices such as phones and laptops, which improves privacy and reduces reliance on cloud services. Voxtral Realtime is open‑source under the Apache 2.0 license and targets live use cases, while Mini Transcribe V2 is built for batch jobs and adds speaker diarization and timestamps. Mistral positions both as cost‑effective alternatives to larger proprietary systems from major US tech firms, crediting efficient model design and dataset quality rather than raw scale.
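To see why roughly 4 billion parameters is small enough for a laptop or phone, a back‑of‑the‑envelope weight‑memory estimate helps. The sketch below is a rough calculation, not a measurement: it counts weight storage only (ignoring activations, caches, and runtime overhead), and the precisions listed are illustrative assumptions rather than formats Mistral is confirmed to ship. The helper `weight_memory_gb` is hypothetical.

```python
# Rough weight-only memory estimate for a ~4-billion-parameter model.
# Assumption: weights dominate; activations, caches, and runtime overhead are ignored.
# Assumption: the precisions below are illustrative, not confirmed release formats.

PARAMS = 4_000_000_000  # ~4B parameters, as stated for these models

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Hypothetical helper: approximate weight storage in GB (1 GB = 10**9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, nbytes in BYTES_PER_PARAM.items():
        print(f"{dtype}: ~{weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

At 16‑bit precision the weights alone take about 8 GB, and 4‑bit quantization would bring that to roughly 2 GB, the range where on‑device inference on recent laptops and phones becomes plausible.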

The release fits Mistral's broader strategy of offering regulation‑friendly AI that fills niche, specialist roles in the speech AI space.

Top 3 benefits for an AI developer:

• Low‑latency, real‑time multilingual transcription - build conversational apps with ~200 ms turnaround

• Open‑source, on‑device deployment - reduces cost, improves privacy, and allows customization

• Efficient, compact models - lower infrastructure requirements for speech/translation features
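The ~200 ms turnaround also caps how much audio a client can buffer before sending it, since the model cannot transcribe speech it has not yet received. The minimal sketch below converts a latency target into a PCM chunk size and splits a buffer accordingly. It assumes 16 kHz, mono, 16‑bit audio, a common speech format that is not confirmed here as Voxtral Realtime's input requirement; `chunk_bytes` and `iter_chunks` are hypothetical helpers, not part of any Mistral SDK, and the actual streaming call is left out.

```python
from typing import Iterator

# Assumed input format (not confirmed for Voxtral Realtime): 16 kHz mono, 16-bit PCM.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2


def chunk_bytes(duration_ms: int,
                sample_rate_hz: int = SAMPLE_RATE_HZ,
                bytes_per_sample: int = BYTES_PER_SAMPLE) -> int:
    """Hypothetical helper: bytes of mono PCM audio covering duration_ms."""
    samples = sample_rate_hz * duration_ms // 1000
    return samples * bytes_per_sample


def iter_chunks(pcm: bytes, duration_ms: int = 200) -> Iterator[bytes]:
    """Hypothetical helper: yield consecutive chunks of at most duration_ms of audio.

    The final chunk may be shorter if the buffer does not divide evenly.
    """
    size = chunk_bytes(duration_ms)
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]


if __name__ == "__main__":
    one_second = bytes(chunk_bytes(1000))  # 1 s of silence (all-zero samples)
    chunks = list(iter_chunks(one_second, 200))
    print(chunk_bytes(200), len(chunks))
```

At these settings a 200 ms chunk is 3,200 samples, or 6,400 bytes, so one second of audio splits into five chunks. Smaller chunks leave more of the latency budget for network and model time, at the cost of sending more requests.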