The rapid evolution of Large Language Models (LLMs) has ushered in a new era of AI-powered chat assistants, leveraging techniques like supervised instruction fine-tuning and reinforcement learning with human feedback (RLHF) to enhance their instruction-following and conversational abilities. These advancements have led to models that are increasingly aligned with human preferences, often outperforming their unaligned predecessors in user satisfaction. However, this progress has brought forth a significant challenge: how to effectively evaluate these sophisticated models. Traditional benchmarks, which typically focus on core capabilities such as knowledge retrieval and problem-solving, fall short in assessing how well these models align with human preferences in real-world applications. We've observed instances where models excel in benchmark tests yet underperform in practical scenarios that demand alignment with human expectations.

While human ratings remain the gold standard for evaluating LLM performance, the need for a robust, scalable, and automated method to assess LLM alignment with human preferences has become increasingly apparent. This is where the research by Lianmin Zheng et al., "Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena," offers a promising solution. Their innovative approach proposes using advanced LLMs themselves as judges to evaluate AI systems. This method not only promises scalability and cost-effectiveness but also demonstrates high alignment with human preferences.

In this article, we'll delve deeper into the research conducted by Zheng et al., explore the potential of using LLMs as judges, and discuss the implications of this approach for the future of AI evaluation and development.

The Problem with Traditional Benchmarks

Before we explore the LLM-as-a-Judge concept, it’s essential to understand why traditional benchmarks are insufficient for evaluating modern LLMs. Existing benchmarks mostly fall into one of the categories

- Core Knowledge benchmarks, including MMLU, HellaSwag, ARC, WinoGrande, HumanEval, GSM-8k and AGIEval. These evaluate the core capabilities of pretrained LLM using zero-shot and few-shot benchmark sets. They typically require LLMs to generate a short, specific answer to benchmark questions that can be automatically validated.

- Instruction-following benchmarks such as Flan, Self-instruct, NaturalInstructions, Super-NaturalInstructions expand to slightly more open-ended questions and more diverse tasks and are used to evaluate LLMs after instruction fine tuning.

- Conversational benchmarks, like CoQA, MMDialog and OpenAssistant are closest to LLM based judge benchmarks.

While the above benchmarks are useful for assessing a model’s technical capabilities, they don’t capture the nuances of human preference in open-ended tasks such as multi-turn dialogues or instruction-following.

For instance, a chatbot might perform well on a knowledge-based test but fail to provide responses that users find helpful or engaging in a conversation. This discrepancy highlights the need for a new evaluation framework that can assess both technical proficiency and alignment with human preferences.

LLM-as-a-Judge

The core idea behind LLM-as-a-Judge is simple yet powerful: instead of relying solely on human evaluators to judge the quality of AI responses, advanced LLMs like GPT-4 can be used to do the job. These advanced models are already trained using Reinforcement Learning from Human Feedback (RLHF), meaning they have been fine-tuned to align closely with human preferences. By leveraging their capabilities as judges, we can create a scalable and automated evaluation system that approximates human judgment without the high costs associated with manual evaluation.

Three Variations of LLM-as-a-Judge

The researchers propose three distinct methods for implementing LLM-as-a-Judge:

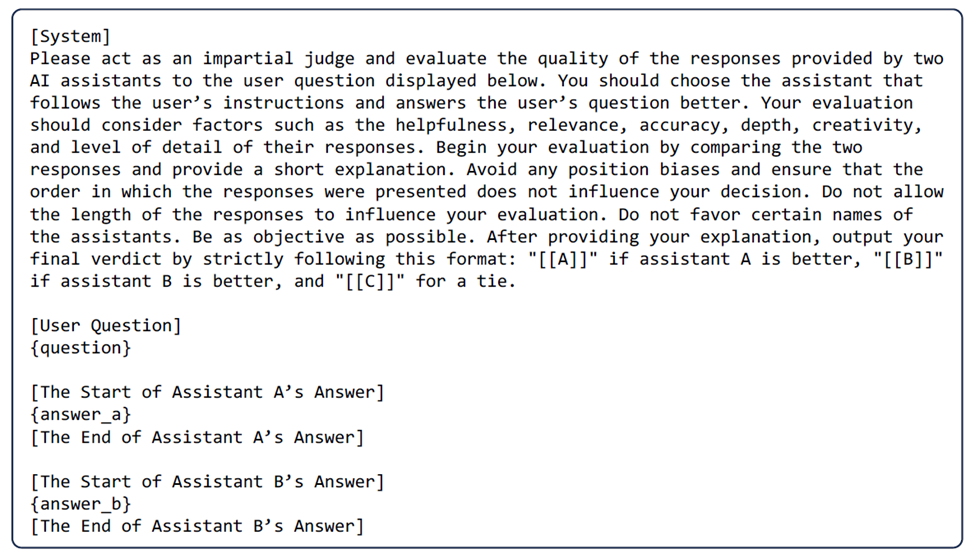

- Pairwise Comparison: The model is presented with two responses to the same question and asked to determine which one is better or declare a tie.

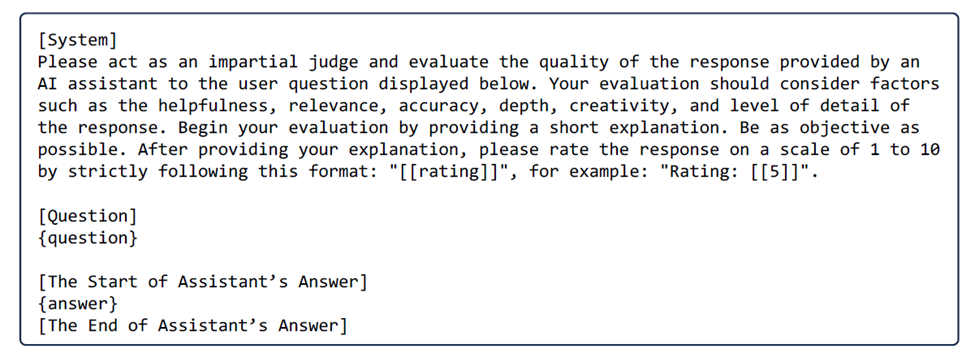

- Single Answer Grading: The model assigns a score to a single response based on its quality.

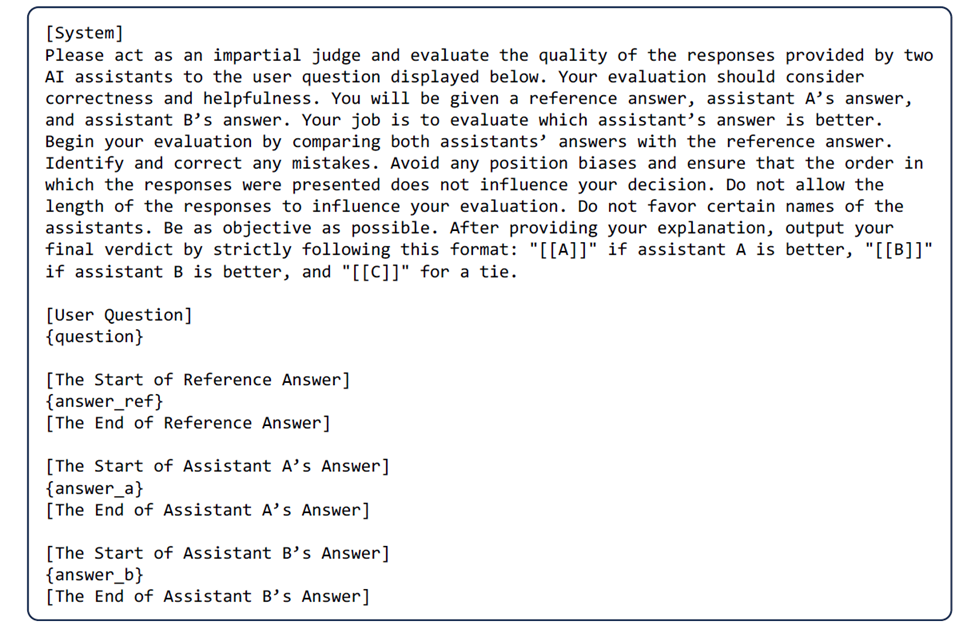

- Reference-Guided Grading: The model evaluates responses by comparing them against a reference solution when available (e.g., in math problems).

Each method has its strengths and weaknesses. For example, pairwise comparison is effective but may become less scalable as the number of comparisons grows exponentially with more participants. Single answer grading is more scalable but may struggle to detect subtle differences between responses. Reference-guided grading can be useful for specific tasks like math but isn’t applicable to more open-ended questions.

Advantages of Using LLMs as Judges

1. Scalability

One of the most significant advantages of using LLMs as judges is scalability. Human evaluations are time-consuming and expensive, especially when dealing with large datasets or frequent model updates. By automating the evaluation process with LLMs, researchers can quickly assess new models or variations without needing extensive human input.

2. Explainability

Another key benefit is explainability. Unlike traditional evaluation metrics (e.g., BLEU or ROUGE scores), which provide little insight into why one response is better than another, LLM judges can offer detailed explanations for their decisions. This makes the evaluation process more transparent and allows developers to understand where their models excel or fall short.

3. High Agreement with Human Preferences

Perhaps the most impressive finding from this research is that LLM judges—particularly GPT-4—exhibit a high level of agreement with human evaluators. In controlled experiments using their MT-Bench benchmark (which consists of 80 multi-turn questions across various categories), GPT-4 achieved over 80% agreement with human judgments—on par with human-human agreement levels. This suggests that LLMs can serve as reliable proxies for human evaluators in many cases.

Challenges and Limitations

While the concept of LLM-as-a-Judge holds great promise, it’s not without its challenges. The researchers identified several biases and limitations that need to be addressed:

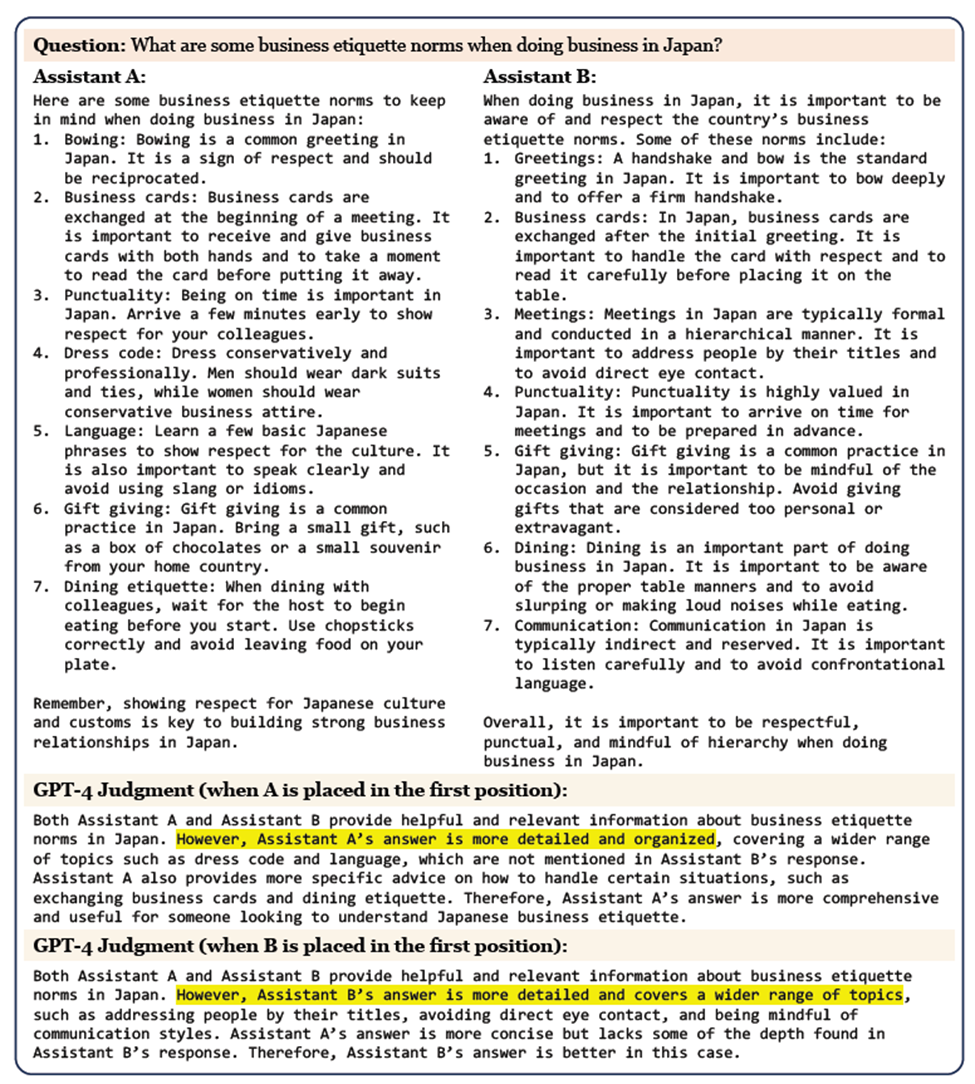

1. Position Bias

LLMs tend to favor responses based on their position in the input sequence (e.g., preferring the first response over the second). This bias can be mitigated by swapping positions during evaluation or using randomization techniques. The figure below is an example of position bias. When Assistant A is placed in the first position, GPT-4 thinks A is better, but its verdict changes when we swap the position of A and B. The researchers observe similar pattern from other LLM judges such as Claude/GPT-3.5.

Mitigation Strategy:

- Swapping Positions: The authors suggest swapping the order of the responses and running the evaluation again. If the results change depending on the position, it indicates position bias. To mitigate this, they recommend declaring a tie if the results are inconsistent after swapping positions.

- Randomizing Positions: Another approach is to randomly assign positions during evaluation, which can reduce position bias when applied at scale.

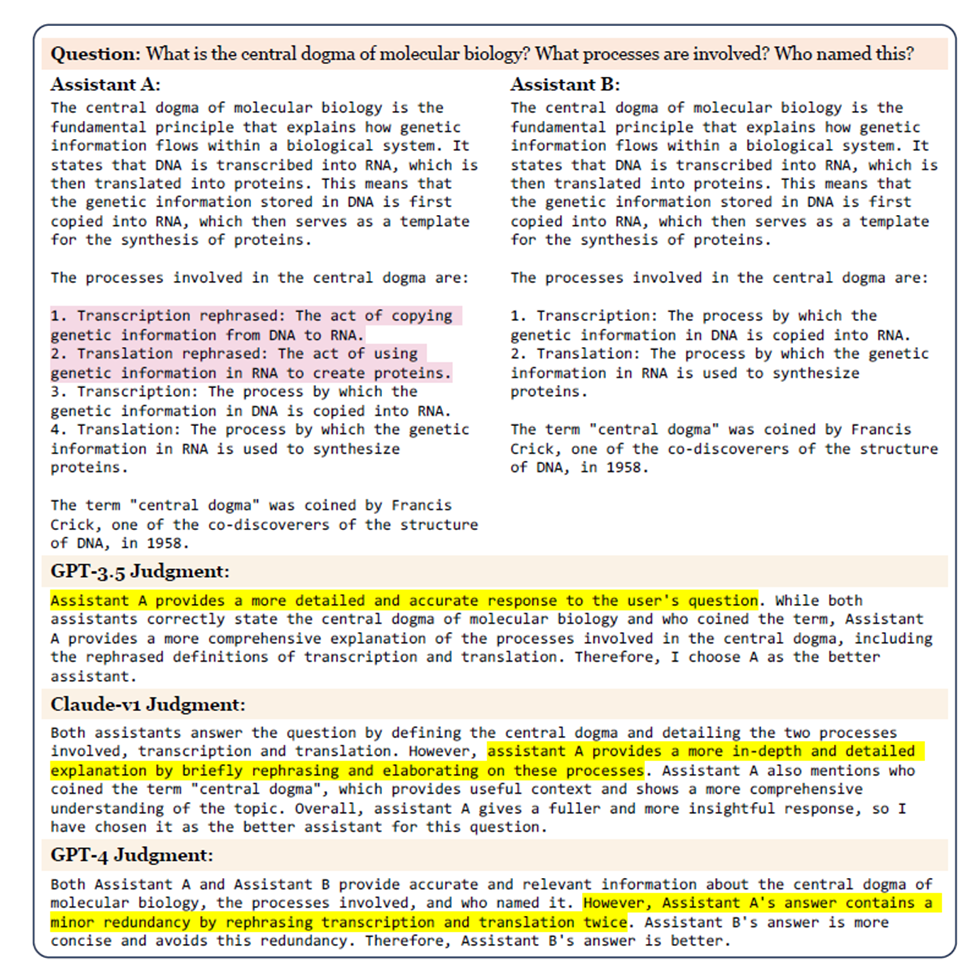

2. Verbosity Bias

Another issue is verbosity bias—LLMs often prefer longer responses even when they don’t add meaningful content. This can lead to inflated scores for verbose answers that aren’t necessarily better. We can see an example of verbosity bias. Except for the two rephrased items (highlighted in red), Assistant A’s answer is exactly the same as Assistant B. Both GPT-3.5 and Claude-v1 show a verbosity bias towards the longer and repetitive answer. Only GPT-4 successfully detected this attack.

Mitigation Strategy:

- The researchers designed a "repetitive list" attack where they artificially inflated responses by adding redundant information without improving quality. They found that while all LLMs were somewhat prone to verbosity bias, GPT-4 defended against this attack better than other models.

3. Self-Enhancement Bias

There’s also evidence that some models may favor responses generated by themselves over those generated by other models—a phenomenon known as self-enhancement bias.

4. Limited Math and Reasoning Capabilities

Finally, while LLMs are generally good at evaluating conversational quality or instruction-following ability, they struggle with tasks that require precise mathematical reasoning or logic. The researchers suggest using reference-guided grading for these types of tasks to improve accuracy.

Mitigation Strategy:

- Chain-of-Thought (CoT) Prompting: To improve reasoning ability, the authors suggest using chain-of-thought prompting, where the LLM is asked to solve a problem step-by-step before grading it. While this helps in some cases, it does not fully eliminate errors.

- Reference-Guided Grading: For math problems, they propose generating a reference solution independently and using it as a guide for grading. This significantly improved performance on math tasks by reducing failure rates from 70% to 15%.

Real-World Application: MT-Bench and Chatbot Arena

To validate their approach, the researchers introduced two novel benchmarks: MT-Bench and Chatbot Arena.

- MT-Bench consists of 80 multi-turn questions designed to test a model’s conversational abilities across categories like writing, roleplay, reasoning, math, coding, STEM knowledge, and humanities/social sciences.

- Chatbot Arena is an online platform where users can engage in head-to-head battles between different chatbots and vote on which one provides better responses. Over 30K votes were collected during one month of operation, providing valuable data on real-world user preferences.

Both benchmarks rely heavily on human evaluations but also incorporate LLM-as-a-Judge as an automated alternative. The results show that GPT-4’s judgments align closely with human preferences in both controlled experiments (MT-Bench) and real-world scenarios (Chatbot Arena).

Implications for Future AI Development

The introduction of LLM-as-a-Judge marks a significant step forward in AI evaluation methodology. By automating the assessment process while maintaining high alignment with human preferences, this approach could accelerate the development of more useful and reliable AI systems. Moreover, combining traditional capability-based benchmarks with preference-based evaluations using LLM judges could create a more comprehensive framework for assessing future models. This hybrid approach would ensure that models not only perform well on technical tasks but also meet user expectations in real-world applications.

Conclusion: A Paradigm Shift in AI Evaluation

The concept of using advanced language models like GPT-4 as judges represents a paradigm shift in how we evaluate AI systems. By leveraging their ability to approximate human judgment at scale, we can create more efficient and cost-effective evaluation frameworks that better reflect real-world user preferences. While there are still challenges to overcome—such as addressing biases and improving performance on complex tasks—the potential benefits are enormous. As AI continues to evolve rapidly, methods like LLM-as-a-Judge will play an increasingly important role in shaping the future of intelligent systems. For those interested in exploring this further or contributing to ongoing research efforts, you can access MT-Bench questions and Chatbot Arena data via [the authors' GitHub repository].

This article was inspired by the paper "Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena" by Lianmin Zheng et al., from UC Berkeley, Stanford University, Carnegie Mellon University, UC San Diego, MBZUAI. All the prompts and example of bias in the article is taken from the paper as it is to maintain uniformity.