At the recent AI Summit conference in London, Alex Klug, Product Sr. Manager of Data Science & AI Solutions at HP, and Yash Sheth, COO & Co-founder of Galileo, discussed the challenges of creating trustworthy generative AI systems, particularly when handling sensitive data. They noted how widely generative AI (Gen AI) is now used and highlighted the problem of "hallucinations," where AI systems produce inaccurate or misleading information. Hallucinations are a growing concern for businesses, which face potential liability and need accuracy in their AI applications.

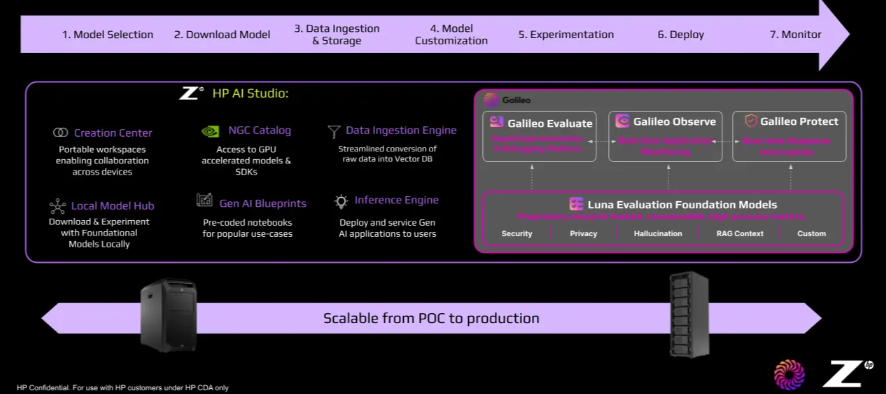

The discussion emphasized that addressing these challenges means rethinking the entire development lifecycle, from model selection and procurement to experimentation, deployment, and monitoring. Solutions must manage sensitive data securely and reliably while also addressing problems such as hallucinations and model drift.

HP’s AI Studio offers secure, local environments for the development and deployment of AI models, complete with tools for collaboration, experimentation, and protection. Complementing this, Galileo’s Luna models provide advanced evaluation and monitoring capabilities for Gen AI systems, focusing on metrics that promote accuracy and reduce hallucinations. Together, these solutions aim to improve the reliability, scalability, and trustworthiness of generative AI technologies.

How do you think these advancements will reshape the future of generative AI and address the growing concerns around its implementation?