At Config, Figma's annual conference, held last month, the company unveiled several new features, including Figma AI. Designers like me have been eagerly awaiting updates from the collaborative design tool giant, especially after its deal with Adobe fell through. The question now is: how will Figma lead the way for creatives to design with AI as a supportive tool? And how will it differ from what we’ve seen from existing tools?

The new features are impressive. They include a text translation feature for previewing product designs in different languages, as well as Figma Slides. Slides is a natural business and feature decision for Figma: designers are already presenting in Figma or building slide decks there rather than migrating to Google Slides or PowerPoint, as the templates available in the Figma Community show. Additionally, a redesign of Dev Mode lets developers focus on details such as newly published updates.

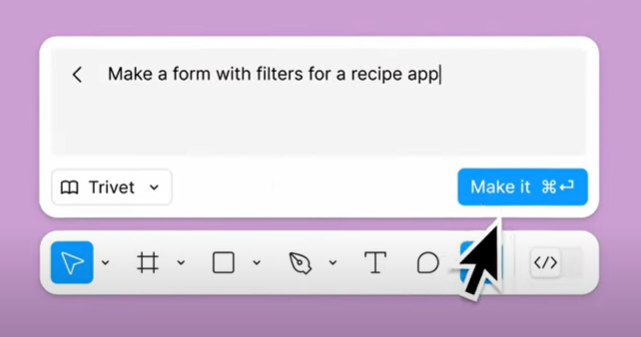

The most significant feature is Figma AI, where users can click a button to "Make a design," enter a prompt, and generate an original design in seconds. Although similar tools exist, Figma's approach uses existing user designs and organizational files to train its models. However, shortly after its release, users found that the feature didn't live up to its promise. When tasked with creating a weather app, the AI repeatedly produced designs that closely resembled the Apple Weather app, which led to the feature being disabled. The output showed too little variability, and the feature was not ready for release.

This raises a new question: what went wrong, and what can we learn from it? How can data scientists, developers, designers, creators, and product managers use this example to inform decisions about our own AI products? I'm curious to hear from data scientists and to learn from your experiences.